Since the SVR is only able to interpolate between the existing data in the training dataset. We need to be sure that the features of the training set should suffice the domain that we are expecting.

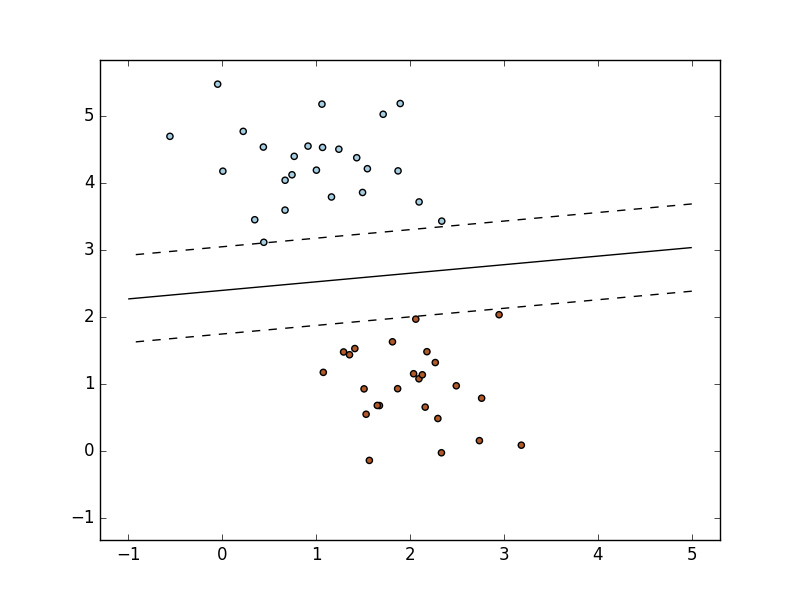

The training set consists of the samples that were collected on which we have to perform prediction. Create an estimator using the coefficients.Train machine to get the contraction coefficients α = α i.Selection of Kernel along with its parameters and any regularization if required.Support Vectors helps in determining the closest match between the data points and the function which is used to represent them.įollowing are the steps needed in the working of SVR: But since it is a regression algorithm instead of using the curve as a decision boundary it uses the curve to find the match between the vector and position of the curve. Given data points, it tries to find the curve. SVR works on the principle of SVM with few minor differences. Note that the classification that is in SVM use of support vector was to define the hyperplane but in SVR they are used to define the linear regression. The objective of SVR is to fit as many data points as possible without violating the margin. These data points lie close to the boundary. Support Vector: It is the vector that is used to define the hyperplane or we can say that these are the extreme data points in the dataset which helps in defining the hyperplane.

It is used to create a margin between the data points.Ĥ. Boundary Lines: These are the two lines that are drawn around the hyperplane at a distance of ε (epsilon). Type of kernel used in SVR is Sigmoidal Kernel, Polynomial Kernel, Gaussian Kernel, etc,ģ. To do that we need a function that should map the data points into its higher dimension. Kernel: In SVR the regression is performed at a higher dimension.

In SVR it is defined as the line that helps in predicting the target value.Ģ. Hyperplane: It is a separation line between two data classes in a higher dimension than the actual dimension.
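To make the kernel and estimator ideas more concrete, here is a minimal pure-Python sketch. It writes out the Gaussian, polynomial, and sigmoid kernels in their common textbook forms and builds an estimator of the form f(x) = Σ α_i · K(x_i, x) + b from a set of coefficients. The function names, the parameter defaults (`gamma`, `degree`, `coef0`), and the coefficient values are assumptions chosen for illustration. The coefficients are hand-picked rather than trained, so the training step itself is not shown.

```python
import math


def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def gaussian_kernel(u, v, gamma=0.5):
    """Gaussian (RBF) kernel: exp(-gamma * ||u - v||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-gamma * sq_dist)


def polynomial_kernel(u, v, degree=2, gamma=1.0, coef0=1.0):
    """Polynomial kernel: (gamma * <u, v> + coef0) ** degree."""
    return (gamma * dot(u, v) + coef0) ** degree


def sigmoid_kernel(u, v, gamma=0.1, coef0=0.0):
    """Sigmoid kernel: tanh(gamma * <u, v> + coef0)."""
    return math.tanh(gamma * dot(u, v) + coef0)


def make_estimator(support_vectors, alphas, bias, kernel):
    """Build f(x) = sum_i alpha_i * K(x_i, x) + bias from given coefficients."""
    def predict(x):
        weighted = (a * kernel(sv, x) for a, sv in zip(alphas, support_vectors))
        return sum(weighted) + bias
    return predict


# Hypothetical support vectors and coefficients, NOT the result of training.
support_vectors = [[0.0], [1.0], [2.0]]
alphas = [0.5, -0.25, 1.0]
predict = make_estimator(support_vectors, alphas, bias=0.1, kernel=gaussian_kernel)
print(predict([1.0]))
```

Passing the kernel as an argument keeps the estimator independent of the kernel choice, which mirrors the first step of the workflow: the kernel and its parameters are selected before any coefficients are computed.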

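The role of the boundary lines at distance ε can also be illustrated in a few lines of code. In the usual SVR formulation this is expressed as the ε-insensitive loss: points inside the tube between the two boundary lines cost nothing, and points outside are penalized only by the amount they exceed ε. The sketch below assumes a fixed, hypothetical line `y = 2x + 1` and made-up sample points. It does not fit anything, it only shows how the margin is evaluated.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the epsilon tube, linear in the excess outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def line(x, w=2.0, b=1.0):
    """Hypothetical regression line standing in for the SVR hyperplane."""
    return w * x + b


def points_inside_tube(samples, epsilon):
    """Return the samples whose residual is within +/- epsilon of the line."""
    return [(x, y) for x, y in samples if abs(y - line(x)) <= epsilon]


epsilon = 0.5
# Made-up (x, y) samples, for illustration only.
samples = [(0.0, 1.2), (1.0, 3.0), (2.0, 6.0), (3.0, 6.4)]

for x, y in samples:
    loss = epsilon_insensitive_loss(y, line(x), epsilon)
    print(f"x={x}, y={y}, prediction={line(x)}, loss={loss:.2f}")

print("inside tube:", points_inside_tube(samples, epsilon))
```

A larger ε widens the tube so that more points fall inside it and contribute no loss, while a smaller ε forces the line to track the data more closely.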